One of the foundational concepts on which I've built my game engine is the separation of concerns. The game simulation is entirely separate from the rendering, fully agnostic to however it appears to the player and how they interact with it.

Why bother?

Keeping the simulation separate from its visual representation is very powerful, and delightfully conceptually clean. It lets you run the game on your continuous integration (CI) server without needing to faff around with trying to get an OpenGL context on a headless VM. In the same vein, it makes it easy to switch between multiple renderer implementations at compile time (or at runtime if you really wanted to) without any code changes1.

Other advantages include the ability to fast forward the simulation at hundreds or thousands of ticks per second, and reusing the same simulation code for client- and server-side in networked games.

Let's get on with it.

Architecture

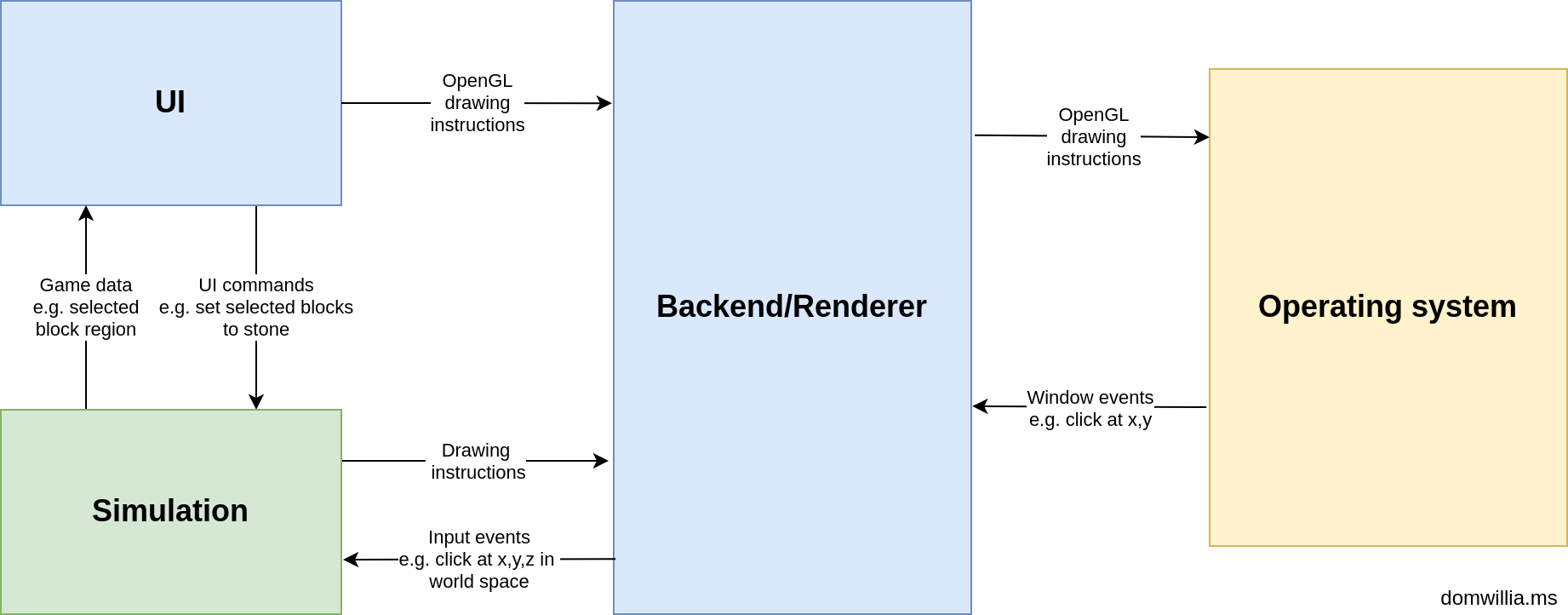

A summary of the engine architecture.

This summarises the architecture of the game engine at a very high level. We'll visit the relevant components in turn, looking at the inputs, outputs and responsibilities of each.

The simulation

The game and all its logic live here. It owns all the entities that live in the game world, along with the logic and systems that apply to them. It exposes two main methods to the game engine.

Tick

tick(camera, ui_commands)

Advances the simulation by a single tick. Note the lack of a delta time (dt) parameter: the simulation is totally independent of real-world time and tick rates. The caller is free to decide on its timestep (e.g. 20tps in the game, as fast as possible in tests).
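To make the dt-free idea concrete, here is a minimal sketch. The `MiniSim` struct and its fields are hypothetical stand-ins, not the engine's real types; the only point is that every rate is expressed per tick, so the caller alone decides how fast ticks happen.

```rust
/// Hypothetical stand-in for the simulation: a single value moving along a line.
pub struct MiniSim {
    pub tick_count: u64,
    pub position: i64,
    pub velocity_per_tick: i64,
}

impl MiniSim {
    pub fn new(velocity_per_tick: i64) -> Self {
        MiniSim { tick_count: 0, position: 0, velocity_per_tick }
    }

    /// Advances the world by exactly one step. There is no `dt` parameter:
    /// all rates are expressed per tick rather than per second.
    pub fn tick(&mut self) {
        self.position += self.velocity_per_tick;
        self.tick_count += 1;
    }
}

/// A test or CI run can tick in a tight loop with no sleeping at all.
pub fn run_as_fast_as_possible(sim: &mut MiniSim, ticks: u64) {
    for _ in 0..ticks {
        sim.tick();
    }
}
```

Because the result depends only on the number of ticks, a thousand ticks produce the same state whether they take 50 seconds at 20tps or a few microseconds in a test.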

The simulation is isolated from the outside world, having no way to directly access the player's input e.g. mouse clicks, keypresses. Instead, it is passed a collection of renderer-agnostic UiCommands and InputEvents on each tick. These are collected by the engine from the backend (via mouse, keyboard, joystick, steering wheel, etc.) in the time between ticks, and processed all at once in the next tick.

An InputEvent represents a raw input by the player, such as a mouse click on an entity. This is different from a UiCommand, which represents a command issued through the UI. See below for a subset of the possible inputs in the engine at the time of writing:

    pub enum InputEvent {
        /// A mouse click in world space
        Click(MouseButton, WorldColumn),

        /// A selection in world space, dragged between two points
        Select(MouseButton, WorldColumn, WorldColumn),
        // ...
    }

    pub enum UiCommand {
        /// Turns a debug renderer on or off
        ToggleDebugRenderer { identifier: &'static str, enabled: bool },

        /// Fills the block region selected by the player with the given block type
        FillSelectedTiles(BlockType),
        // ...
    }
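The "collect between ticks, process all at once" pattern could be sketched roughly like this. The `CommandQueue` type is an assumption made up for illustration, and the `UiCommand` here is a cut-down copy that uses a plain `u8` in place of `BlockType` so the snippet stands alone.

```rust
/// Simplified, hypothetical copy of the command type so the sketch is self-contained.
#[derive(Debug, Clone, PartialEq)]
pub enum UiCommand {
    ToggleDebugRenderer { identifier: &'static str, enabled: bool },
    FillSelectedTiles(u8), // u8 stands in for BlockType
}

/// Hypothetical queue that accumulates commands in the time between ticks.
#[derive(Default)]
pub struct CommandQueue {
    pending: Vec<UiCommand>,
}

impl CommandQueue {
    /// Called whenever the UI issues a command, possibly many times per tick.
    pub fn push(&mut self, command: UiCommand) {
        self.pending.push(command);
    }

    /// Hands over everything queued so far, in order, and leaves the queue empty
    /// ready for the next tick.
    pub fn drain_for_tick(&mut self) -> Vec<UiCommand> {
        std::mem::take(&mut self.pending)
    }
}
```

The simulation only ever sees the drained batch, so it never needs to know where the commands came from.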

The other input parameter is the camera position. The camera itself lives in the backend, and describes the region of the world that is visible to the player2. Currently this is used to prioritise visible terrain updates over those that are out of view, but may eventually be used for determining the level of detail (LOD) of the simulation, in which entities that are further away are updated at a slower rate or in coarser detail.
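As a rough illustration of prioritising visible terrain updates, the sketch below reorders a list of pending chunk updates so that anything inside the camera's range comes first. `ChunkPos`, `VisibleRange` and `prioritise` are all hypothetical names; the real engine may well schedule this differently.

```rust
/// Hypothetical 2D chunk coordinate.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct ChunkPos {
    pub x: i32,
    pub y: i32,
}

/// Hypothetical view of the camera: an inclusive rectangle of visible chunks.
pub struct VisibleRange {
    pub min: ChunkPos,
    pub max: ChunkPos,
}

impl VisibleRange {
    pub fn contains(&self, pos: ChunkPos) -> bool {
        (self.min.x..=self.max.x).contains(&pos.x) && (self.min.y..=self.max.y).contains(&pos.y)
    }
}

/// Reorders pending updates so that visible chunks are handled first.
/// `sort_by_key` is stable, so the original order is kept within each group.
pub fn prioritise(updates: &mut [ChunkPos], camera: &VisibleRange) {
    // `false` sorts before `true`, so visible chunks (not hidden) come first
    updates.sort_by_key(|pos| !camera.contains(*pos));
}
```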

A brief summary of tick's responsibilities follows:

- Apply terrain updates3

- Act on UiCommands

- Run entity-component-system (ECS) systems, i.e. the majority of the game logic

- End-of-tick maintenance (applying deferred updates generated by the previous step e.g. insertion/removal of entities)

Render

render(camera, render_target, interpolation, input_events) -> ui_blackboard

Renders a single frame of the current game state, interpolated with the given interpolation value for smooth movement as covered in the previous devlog. This allows for rendering the simulation at 60fps while ticking at only 20tps.
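For readers who skipped the previous devlog, interpolation of this kind usually boils down to a linear blend between the last two ticked positions. The sketch below assumes the interpolation value lies in the range 0 to 1 and that each entity keeps its previous and current position; `Vec2` and `Transform` are hypothetical types, not the engine's.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Vec2 {
    pub x: f32,
    pub y: f32,
}

/// Hypothetical transform that remembers where the entity was one tick ago.
pub struct Transform {
    pub previous: Vec2,
    pub current: Vec2,
}

impl Transform {
    /// Blends between the previous and current tick positions.
    /// `alpha` = 0.0 gives the previous position, 1.0 gives the current one.
    pub fn interpolated(&self, alpha: f32) -> Vec2 {
        Vec2 {
            x: self.previous.x + (self.current.x - self.previous.x) * alpha,
            y: self.previous.y + (self.current.y - self.previous.y) * alpha,
        }
    }
}
```

At 60fps and 20tps, roughly three frames are rendered per tick, each with a larger `alpha` than the last, which is what makes the movement look smooth.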

As in tick, the camera input defines the visible area of the world. The simulation is only concerned with rendering entities as opposed to terrain, which is handled by the backend, described below.

The render_target is a backend-specific struct that implements a common interface for rendering entities. This is passed to a special ECS rendering system4, which in standard ECS fashion iterates over all the entities with a transform (defining position) and render component (defining colour/size/sprite/texture) to produce a list of renderable entities that the backend can draw however it wants.
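A stripped-down sketch of this arrangement might look like the following. It uses no ECS library at all: the `Entity` struct with optional components, the `RenderTarget` trait and the `RecordingTarget` are all hypothetical simplifications chosen to show the shape of the idea.

```rust
/// Hypothetical common interface that every backend's render target implements.
pub trait RenderTarget {
    fn draw_entity(&mut self, position: (f32, f32), colour: [u8; 3]);
}

/// Hypothetical entity with optional components, standing in for real ECS storage.
pub struct Entity {
    pub transform: Option<(f32, f32)>,
    pub render: Option<[u8; 3]>,
}

/// The "rendering system": visits every entity that has both a transform and a
/// render component and hands it to whichever target the backend supplied.
pub fn render_system<T: RenderTarget>(entities: &[Entity], target: &mut T) {
    for entity in entities {
        if let (Some(position), Some(colour)) = (entity.transform, entity.render) {
            target.draw_entity(position, colour);
        }
    }
}

/// A target that only records what it was asked to draw, e.g. for headless tests.
#[derive(Default)]
pub struct RecordingTarget {
    pub drawn: Vec<((f32, f32), [u8; 3])>,
}

impl RenderTarget for RecordingTarget {
    fn draw_entity(&mut self, position: (f32, f32), colour: [u8; 3]) {
        self.drawn.push((position, colour));
    }
}
```

The simulation code in `render_system` never mentions OpenGL or SDL2; swapping the target is all it takes to change how (or whether) anything is drawn.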

The input_events as described above are resolved to their appropriate selections (e.g. select a single entity, select a region of blocks in the world) by the ECS input system. This system is also run during rendering for increased responsiveness; the player's selection will appear to them in the next frame (in ~16ms at 60fps) rather than the next tick (in ~50ms at 20tps).

Rendering returns a struct that I've termed the UI blackboard. It is simply a structured dump of game data, gathered each frame from the simulation and passed to the UI to be displayed. I've gone with this push approach, where the simulation gathers data about itself and pushes it to the UI, rather than pulling (the UI queries information from the simulation as it wants) because it helps maintain the conceptual split between the game and its rendering.
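The push approach can be sketched as below. All names here (`Game`, `UiBlackboard`, `SelectedEntity`, `ui_lines`) are hypothetical, and the fields are a guess at the kind of data involved rather than the engine's actual blackboard.

```rust
/// Hypothetical per-frame snapshot handed to the UI. Plain data only.
#[derive(Debug, Clone, PartialEq, Default)]
pub struct UiBlackboard {
    pub entity_count: usize,
    pub selected: Option<SelectedEntity>,
}

#[derive(Debug, Clone, PartialEq)]
pub struct SelectedEntity {
    pub name: String,
    pub hunger: u8,
}

/// Hypothetical stand-in for the simulation's private state.
pub struct Game {
    entities: Vec<(String, u8)>, // (name, hunger)
    selected: Option<usize>,
}

impl Game {
    pub fn new(entities: Vec<(String, u8)>, selected: Option<usize>) -> Self {
        Game { entities, selected }
    }

    /// Push: the simulation decides what to expose and copies it out each frame.
    pub fn gather_blackboard(&self) -> UiBlackboard {
        let selected = self
            .selected
            .and_then(|index| self.entities.get(index))
            .map(|(name, hunger)| SelectedEntity { name: name.clone(), hunger: *hunger });
        UiBlackboard { entity_count: self.entities.len(), selected }
    }
}

/// The UI only ever sees the blackboard, never the simulation itself.
pub fn ui_lines(blackboard: &UiBlackboard) -> Vec<String> {
    let mut lines = vec![format!("Entities: {}", blackboard.entity_count)];
    if let Some(entity) = &blackboard.selected {
        lines.push(format!("Selected: {} (hunger {})", entity.name, entity.hunger));
    }
    lines
}
```

The trade-off is that some data gets copied every frame even if the UI ignores it, in exchange for the UI having no handle on the simulation at all.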

The responsibilities of render can be summarised as below:

- Process raw input events from the player

- Render entities

- Render debug shapes (coloured lines and simple shapes to aid debugging)

- Gather game data for the UI

The backend

The backend represents the renderer and is the interface between the player and the game. In this section I will be describing the current concrete implementation that uses SDL2 for window management and event handling, ImGui for the UI, and OpenGL for drawing.

Tick

tick()

Unbelievable, a simple function signature. Like the simulation, the backend is ticked 20 times per second. Its main responsibility is ticking the camera, moving it around based on user input. The camera's position is treated as any other game object, updated with a fixed timestep but interpolated every frame to result in smooth motion.

Although the world's terrain data lives in the simulation, its mesh (visual representation) lives in the backend. When terrain is modified, the backend needs to regenerate the mesh to represent the change. As an optimisation, only the meshes of terrain regions that are visible to the player are updated.

A nice, simple summary for a nice, simple method follows:

- Update the camera's position

- Regenerate meshes for visible, modified world regions
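The mesh regeneration step could be sketched with a simple dirty set. This is an illustration under assumptions: `MeshCache` and `RegionId` are made-up names, and I am assuming that modified regions outside the view simply stay marked until they become visible.

```rust
use std::collections::HashSet;

/// Hypothetical identifier for a region of terrain.
pub type RegionId = i32;

/// Hypothetical backend-side bookkeeping of which region meshes are out of date.
#[derive(Default)]
pub struct MeshCache {
    dirty: HashSet<RegionId>,
}

impl MeshCache {
    /// Called when the simulation reports that a region's terrain changed.
    pub fn mark_dirty(&mut self, region: RegionId) {
        self.dirty.insert(region);
    }

    pub fn is_dirty(&self, region: RegionId) -> bool {
        self.dirty.contains(&region)
    }

    /// Regenerates only the dirty regions that are currently visible and returns
    /// their ids in ascending order. Out-of-view regions stay dirty for later
    /// (an assumption of this sketch).
    pub fn regenerate_visible(&mut self, visible: &HashSet<RegionId>) -> Vec<RegionId> {
        let mut ready: Vec<RegionId> = self.dirty.intersection(visible).copied().collect();
        ready.sort_unstable();
        for region in &ready {
            // A real backend would rebuild and upload the mesh here.
            self.dirty.remove(region);
        }
        ready
    }
}
```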

Render

render(simulation, interpolation) -> ui_commands

This is where everything the player sees is rendered, i.e. the terrain, the simulation, and the UI. I'll start with the summary this time:

- Clear the window with a solid colour

- Calculate projection and view matrices from the camera's position

- Render the terrain

- Render the simulation (as above), gathering the UI blackboard

- Render the UI, gathering UI commands

The terrain and entities are rendered at their correct Z coordinate5 (i.e. the vertical axis in world space) with depth testing, so the render order technically doesn't matter in this case - terrain overhangs will occlude entities as expected.

As mentioned above, rendering the simulation produces a UI blackboard. This holds information such as the currently selected entity and details about it (e.g. hunger, current navigation destination, current activity), and is passed to the UI to be rendered in a simple immediate-mode GUI. Commands issued from the UI are collected and passed to the simulation on the next tick.

Consume window events

consume_events() -> outcome

This is where the backend interacts with the operating system, receiving SDL2 events for clicks/window resizes/keystrokes that are translated into InputEvents. It is called by the engine at the beginning of each game loop iteration, before any ticking or rendering. In nearly all cases these window events are handled internally in the backend or simulation, but there are a couple that affect the engine itself - for example, clicking the window close button or sending a SIGINT to the process.

After handling the queued window events for this frame, an outcome is returned to the engine. This can take one of the following self-explanatory values:

- Continue - keep running the game

- Exit - exit the game

- Restart - destroy the simulation and start a new one
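Reducing a frame's worth of window events to a single outcome might look something like this. The `WindowEvent` enum is a hypothetical simplification and not SDL2's real event type, the restart key is invented for the example, and letting Exit take precedence over Restart is my own assumption.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Outcome {
    Continue,
    Exit,
    Restart,
}

/// Hypothetical, heavily simplified window event (not SDL2's actual type).
pub enum WindowEvent {
    CloseRequested,
    RestartKeyPressed,
    MouseMoved,
}

/// Drains the events queued for this frame and reduces them to one outcome.
pub fn consume_events(events: impl IntoIterator<Item = WindowEvent>) -> Outcome {
    let mut outcome = Outcome::Continue;
    for event in events {
        match event {
            // Exiting wins over everything else, so stop processing immediately
            WindowEvent::CloseRequested => return Outcome::Exit,
            WindowEvent::RestartKeyPressed => outcome = Outcome::Restart,
            // Most events never reach the engine; a real backend would handle
            // them internally or translate them into InputEvents here
            WindowEvent::MouseMoved => {}
        }
    }
    outcome
}
```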

The engine

The main job of the engine is simply to call these methods on the backend and simulation, passing data between them. The core of it can be described in Rust-like pseudocode:

    // the core game loop
    loop {
        // handle window events
        if backend.consume_events() != Continue {
            // either exit or restart the game
        }

        // tick and render as the fixed timestep game loop dictates
        for action in game_loop.actions() {
            match action {
                FrameAction::Tick => {
                    simulation.tick(&camera, ui_commands);
                    backend.tick();
                }
                FrameAction::Render { interpolation } => {
                    ui_commands += backend.render(&mut simulation, interpolation);
                }
            }
        }
    }
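The pseudocode leans on `game_loop.actions()` to decide when to tick and when to render. One common way to build such a thing is a time accumulator, sketched below. This is an assumption about how a fixed timestep loop can work, not the engine's actual implementation, and unlike the pseudocode this `actions` takes the elapsed time as a parameter so that it can be tested without a real clock.

```rust
use std::time::Duration;

#[derive(Debug, Clone, Copy, PartialEq)]
pub enum FrameAction {
    Tick,
    Render { interpolation: f64 },
}

/// Hypothetical fixed timestep scheduler based on a time accumulator.
pub struct GameLoop {
    tick_length: Duration,
    accumulator: Duration,
}

impl GameLoop {
    pub fn new(ticks_per_second: u32) -> Self {
        GameLoop {
            tick_length: Duration::from_secs(1) / ticks_per_second,
            accumulator: Duration::ZERO,
        }
    }

    /// Given the real time elapsed since the last call, returns every tick that
    /// is now owed, followed by a single render.
    pub fn actions(&mut self, elapsed: Duration) -> Vec<FrameAction> {
        self.accumulator += elapsed;
        let mut actions = Vec::new();
        while self.accumulator >= self.tick_length {
            self.accumulator -= self.tick_length;
            actions.push(FrameAction::Tick);
        }
        // The leftover time is how far we are into the next, not yet run, tick
        let interpolation = self.accumulator.as_secs_f64() / self.tick_length.as_secs_f64();
        actions.push(FrameAction::Render { interpolation });
        actions
    }
}
```

At 20tps a tick is 50ms long, so a 125ms gap yields two ticks and a render that is halfway towards the third.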

Summary

I'm sure you're clamouring to know how exactly the game is initialised and restarts itself, but this post has gone on long enough. In the future I will address this along with the concept of presets, which describe how to set up the game in terms of world generation, entity population, etc. for different scenarios such as development, testing, and gameplay.

1. I use a "lite" no-op renderer implementation that runs the game for 30 seconds as part of the CI test suite without rendering, which can help find low-hanging panics. It skips the fixed timestep game loop to run as fast as the CPU can manage.

2. If you're interested, what I refer to as the camera here actually exists in code as the WorldViewer. It is backend-agnostic and tracks the player's 3D viewport of the world. This differs to the Camera, which is the SDL2 backend's game object representing the player's moving perspective in the world. To keep the needless complexity as low as possible, I will continue to misleadingly refer to the WorldViewer as the camera.

3. This involves applying occlusion (lighting) updates and posting terrain modifications to the world loader thread pool. This topic will be covered in detail in a future post, and with luck I will update this footnote with a link when the time comes.

4. "Special" here refers to how the system is run every frame during rendering, whereas other normal systems are run in tick as part of the game logic.

5. Actually, this isn't completely true. If everything was rendered at their true Z coordinate in this practically infinite world we would eventually run into floating point inaccuracies with large values that lead to subtle rendering bugs. For example, you may be surprised to find that 5000.0_f32 + 0.0001_f32 = 5000.0. To solve this issue early on, all Z coordinates are uniformly scaled down in order to be close to zero (where the possible floating point values are most densely distributed) while still being correct relative to one another. The same will eventually be needed for X/Y coordinates6 as well.

6. Who knew you could have footnotes inside footnotes? This is the cause of Minecraft's Far Lands and is pretty much a non-issue for most players, as problems only occur at ridiculous distances from the world origin.
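The floating point claim in footnote 5 is easy to check directly. The function name below is made up for the demonstration; the arithmetic is plain `f32`.

```rust
/// Adds the same small offset to a large and a small f32 and returns both sums.
pub fn f32_precision_demo() -> (f32, f32) {
    // Around 5000, adjacent f32 values are roughly 0.0005 apart, so an offset
    // of 0.0001 is rounded away entirely
    let far = 5000.0_f32 + 0.0001_f32;
    // Around 1, adjacent f32 values are roughly 0.0000001 apart, so the same
    // offset survives
    let near = 1.0_f32 + 0.0001_f32;
    (far, near)
}
```

The first sum compares equal to `5000.0_f32`, while the second is strictly greater than `1.0_f32`, which is why keeping coordinates close to zero preserves detail.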